Why in the News?

The Union Ministry of Electronics and Information Technology (MeitY) has proposed amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 (IT Rules 2021) to check the misuse of synthetically generated information, including deepfakes.

More on the News

- The amended Rules, to be called the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Amendment Rules, 2025, shall come into effect from November 15, 2025.

- The proposed amendments aim to strengthen the due diligence obligations of intermediaries, particularly Social Media Intermediaries (SMIs) and Significant Social Media Intermediaries (SSMIs).

About Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021

- The Rules were originally notified in 2021 and subsequently amended in 2022 and 2023. They prescribe:

- A framework for regulating content published by online publishers of news and current affairs content, and of curated audio-visual content.

- Due diligence obligations on intermediaries, including SMIs, with the objective of ensuring online safety, security and accountability.

- Definitions of SMIs and SSMIs:

- SMI means an intermediary which primarily or solely enables online interaction between two or more users and allows them to create, upload, share, disseminate, modify or access information using its services.

- SSMI means a social media intermediary whose number of registered users in India exceeds the threshold notified by the Central Government (currently 50 lakh).

Key Features of the proposed Amendments

| Aspect | Details |
|---|---|
| Defines Synthetically Generated Information (SGI) | |
| Due Diligence in Relation to SGI | |
| Enhanced Obligations for SSMIs | It requires SSMIs to … If they fail to comply, the platforms may lose the legal immunity (safe harbour under Section 79 of the IT Act, 2000) they enjoy for third-party content. |
| Senior-level Authorisation | Any intimation to intermediaries for removal of unlawful information can now only be issued by … |

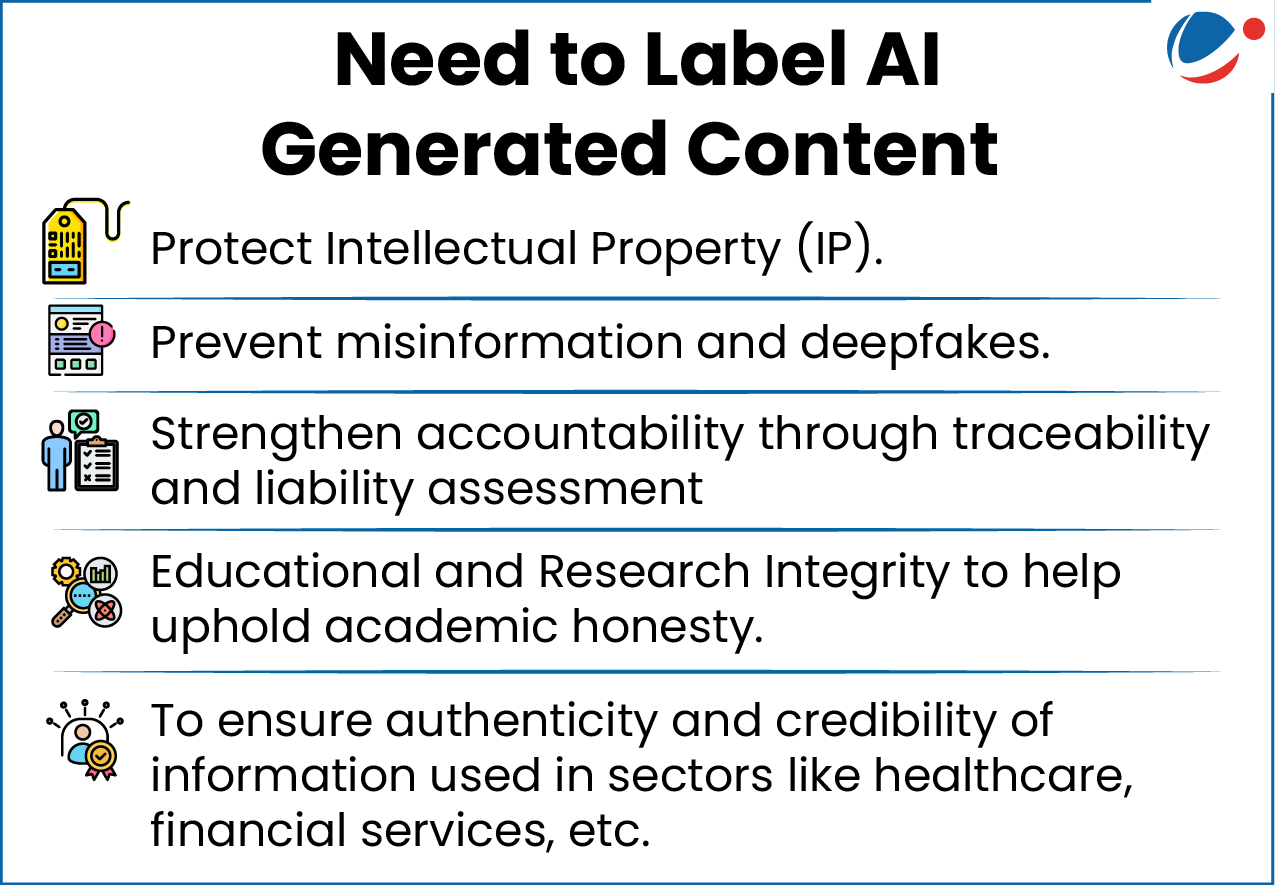

Challenges in Identifying AI-Generated Content

- Lack of benchmarks: Existing AI detectors have high error rates, and the absence of standardized benchmarks makes their accuracy hard to measure or compare. This often results in false positives (flagging human-created content as AI-generated) or false negatives (failing to identify AI-generated content).

- Lack of capacity: The sheer volume of AI-generated content exceeds the processing and storage capabilities of most detection systems. Further, scaling up detection systems will pose financial challenges.

- Anonymity: AI-generated content and deepfakes can be created anonymously or hosted on foreign servers. Further, the lack of unified laws or a common regulatory mechanism across jurisdictions hinders cross-border verification and traceability.

- Imperceptibility: Content produced by Generative AI (GenAI) blends seamlessly with human-created content, making it difficult for detection systems to identify.

- E.g., tools like Midjourney, DALL-E and Stable Diffusion can generate hyper-realistic images that are often difficult to identify as AI-generated.

- Balancing Innovation and Privacy: Detection mechanisms often raise privacy concerns because they rely on metadata tracking. Over-regulation could constrain AI innovation, while under-regulation risks the unchecked spread of misinformation.
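The two error types named under "Lack of benchmarks" correspond to standard rates that a shared benchmark would report. The minimal Python sketch below computes the false positive rate and false negative rate for a detector. All counts are hypothetical and are not results for any real tool. A common benchmark would fix the test set, which makes such figures comparable across detectors.

```python
from dataclasses import dataclass


@dataclass
class DetectorCounts:
    """Hypothetical evaluation counts for an AI-content detector."""
    true_positives: int   # AI-generated items correctly flagged as AI
    false_positives: int  # human-created items wrongly flagged as AI
    true_negatives: int   # human-created items correctly passed as human
    false_negatives: int  # AI-generated items the detector missed


def false_positive_rate(c: DetectorCounts) -> float:
    """Share of human-created items wrongly flagged as AI-generated."""
    return c.false_positives / (c.false_positives + c.true_negatives)


def false_negative_rate(c: DetectorCounts) -> float:
    """Share of AI-generated items the detector failed to flag."""
    return c.false_negatives / (c.false_negatives + c.true_positives)


# Hypothetical test set: 1,000 human-created and 1,000 AI-generated items.
counts = DetectorCounts(true_positives=850, false_positives=60,
                        true_negatives=940, false_negatives=150)
print(f"FPR = {false_positive_rate(counts):.1%}")  # FPR = 6.0%
print(f"FNR = {false_negative_rate(counts):.1%}")  # FNR = 15.0%
```

Without an agreed test set, two vendors can report very different rates for the same tool simply by choosing different samples.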

Initiatives taken to tackle Deepfakes

| India | Global |
|---|---|

Way Forward

- Digital framework: A durable solution for AI content detection can be developed based on three pillars:

- Establishing a digital provenance framework, similar to Aadhaar, that embeds invisible yet verifiable signatures to authenticate content.

- Implementing tiered accountability that assigns responsibility based on the role and influence of platforms managing synthetic media.

- Promoting AI literacy to empower citizens to recognize manipulation.

- Governance Architecture: Develop regulatory structures, standardized technical protocols, and robust oversight mechanisms to strengthen AI content detection, while balancing privacy and ethical considerations.

- Watermarking: AI watermarking can help by embedding durable, hard-to-remove markers into AI-generated content. These markers serve as a digital signature that attests to the content's origin and integrity.

- E.g., China's mandatory AI labeling rules.

- Establish global standards for AI-generated content detection: Align domestic frameworks with international benchmarks and review policies periodically to ensure robust and adaptive governance of the AI ecosystem.

- Multi-stakeholder approach: Actively engage government bodies, industry representatives, academia and civil society in consultations to identify and share best practices for AI content detection.
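The "verifiable signatures" idea in the digital provenance pillar can be illustrated with a minimal Python sketch. It attaches a provenance record to a piece of content, and any later edit to the content or to its claimed origin then fails verification. For simplicity the sketch uses a shared-secret HMAC from the standard library. Real provenance standards such as C2PA use public-key certificates, so that anyone can verify a record without holding the secret. The key, the generator name and both function names are hypothetical. The sketch also does not show invisible embedding into the content itself, which is what watermarking addresses.

```python
import hashlib
import hmac

# Hypothetical signing key held by a generator or platform (illustration only).
SIGNING_KEY = b"example-secret-key"


def _message(content: bytes, generator: str) -> bytes:
    """Bind the claimed generator to a SHA-256 hash of the content bytes."""
    return generator.encode() + b"|" + hashlib.sha256(content).digest()


def sign_content(content: bytes, generator: str) -> dict:
    """Create a provenance record: claimed origin plus an HMAC tag over it."""
    tag = hmac.new(SIGNING_KEY, _message(content, generator), hashlib.sha256).hexdigest()
    return {"generator": generator, "tag": tag}


def verify_content(content: bytes, record: dict) -> bool:
    """Return True only if neither the content nor its claimed origin changed."""
    expected = hmac.new(SIGNING_KEY, _message(content, record["generator"]),
                        hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record["tag"])


image = b"synthetic image bytes (placeholder)"
record = sign_content(image, generator="hypothetical-genai-tool")
print(verify_content(image, record))              # True
print(verify_content(image + b"edited", record))  # False
```

Tampering with either part of the record breaks verification. This is the "authenticate content" property the pillar relies on.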

Conclusion

As India steps into the era of AI governance, harmonizing domestic regulations with global standards, fostering innovation-friendly safeguards, and enhancing public AI literacy will be crucial to building a safe and trustworthy online space that upholds both creativity and integrity.