Introduction

With the share of the world's information held in digital form growing from just 1% in 1986 to 98% by 2013, personal data has emerged as the new gold of the 21st century. This transformation has fueled the rise of Surveillance Capitalism, a system in which human experiences and behaviors are harvested as raw material for profit. This shift, led by tech giants like Google, Meta, and Amazon, raises profound ethical, social, and regulatory concerns about privacy, autonomy, and democratic accountability.

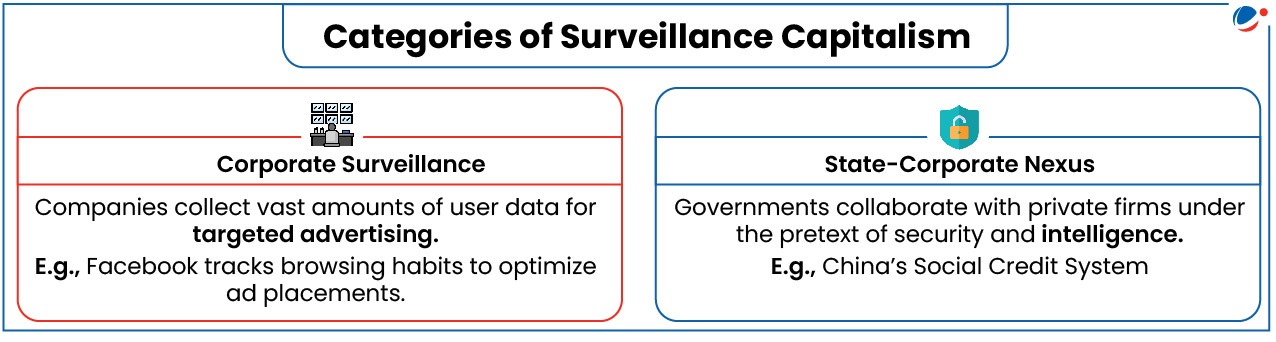

What is Surveillance Capitalism?

- Definition: An economic system in which private corporations (e.g., Amazon, Alphabet, Meta) systematically collect, analyze, and monetize personal data to predict and influence human behavior for profit (e.g., targeted ads, pricing, insurance decisions).

- Mechanism: It operates through:

  - Data extraction: Platforms like Google, Facebook, and Amazon track user activities, from search queries to purchase history.

  - Predictive analytics: AI and algorithms analyze behavioral patterns to anticipate user preferences.

  - Influence techniques: The insights gained are used to shape consumer choices, political opinions, and even emotions through targeted ads, dynamic pricing, and behavioral nudging.

Traditional Capitalism vs. Surveillance Capitalism

| Feature | Traditional Capitalism | Surveillance Capitalism |
| --- | --- | --- |
| Resource base | Labor and natural resources (coal, steel, etc.) | Personal data extracted from users |
| Value creation | Mass production of goods (e.g., Ford's assembly line) | Behavioral modification through digital nudging |
| Profit model | Selling physical products or services | Monetizing data via targeted advertising and AI-driven pricing |
| Example | Steel mills, automobile factories | Google Ads, Amazon recommendations |

Ethical Implications of Surveillance Capitalism

- Manipulation: Algorithms exploit cognitive biases to shape users' decisions without their awareness.

  - E.g., YouTube's recommendation system maximizes engagement by promoting emotionally charged content.

- Privacy Erosion: Data is often collected without proper consent, leading to mass surveillance.

  - E.g., In 2021, France ordered Clearview AI to stop collecting individuals' data without a legal basis.

- Commodification of Personal Data: Sensitive data, once private, is now bought and sold like a commodity.

  - E.g., In 2018, sleep apnea machines in the U.S. were reported to be secretly sending usage data to insurance firms, affecting coverage.

- Unfair Commercial Practices: Companies lack transparency about how they use data.

  - E.g., Italy fined Facebook €7 million in 2021 for misleading users about data collection.

- Democratic Violations: State and corporate surveillance weaken citizen autonomy.

  - E.g., India's IT Rules (2021) blur the line between national security and government control.

- Mental Health Risks: Exposure to curated content designed to maximize engagement can cause stress and anxiety.

  - E.g., Social media algorithms prioritize content that triggers anger and fear, amplifying political polarization.

Challenges in Controlling Surveillance Capitalism

- Regulation: Existing laws have failed to dismantle the core practice of commodifying data. Tech giants often lobby against stringent oversight, as seen in their resistance to antitrust measures.

- Technology: The rapid evolution of AI and IoT (Internet of Things) outpaces regulatory frameworks.

- Corporate-State Collusion: The alignment of corporate and state interests (e.g., data sharing with intelligence agencies) reduces public scrutiny and complicates accountability.

Efforts to Regulate Surveillance Capitalism

| Global | India |
| --- | --- |

Way Forward

- Stronger Regulatory Frameworks: Enact adaptive laws with clear accountability, regular audits, and severe penalties to deter misuse. E.g., India should strengthen the Digital Personal Data Protection (DPDP) Act, 2023, by limiting exemptions and ensuring judicial oversight.

- User Empowerment: Promote data literacy campaigns and enforce transparent consent mechanisms, enabling individuals to reclaim agency over their data.

- Antitrust Measures: Break up tech monopolies to curb their unchecked power and ensure fair competition and innovation, as is being discussed in the USA.

- Global Cooperation: Harmonize international standards to prevent data exploitation in less-regulated regions, fostering a unified response to a borderless challenge.

- Ethical Technology Design: Encourage tech firms to prioritize privacy-by-design, reducing surveillance incentives at the development stage.

Check your Ethical Aptitude

You are the CEO of a mid-sized Indian tech startup that has developed an innovative mobile app designed to improve financial inclusion. The app uses AI algorithms to analyze users' online behavior, spending habits, and social media activity to offer personalized micro-loans and financial advice to underserved populations, such as rural farmers and small vendors. Since its launch, the app has gained popularity, serving over 500,000 users and attracting significant investment from venture capitalists.

However, a recent exposé by a news outlet revealed that your company has been sharing anonymized user data with third-party advertisers and insurance firms to generate additional revenue, a practice buried in the app's lengthy terms of service that most users did not fully understand or consent to.

You are at a crossroads. Continuing the data-sharing could secure the company's financial stability and fuel expansion, but it risks legal action and the loss of user trust and employee morale. Stopping it might jeopardize the company's growth and investor confidence, potentially undermining your mission to serve marginalized communities.

Based on the above case study, answer the following questions:
